The download links lead to ZIP files.

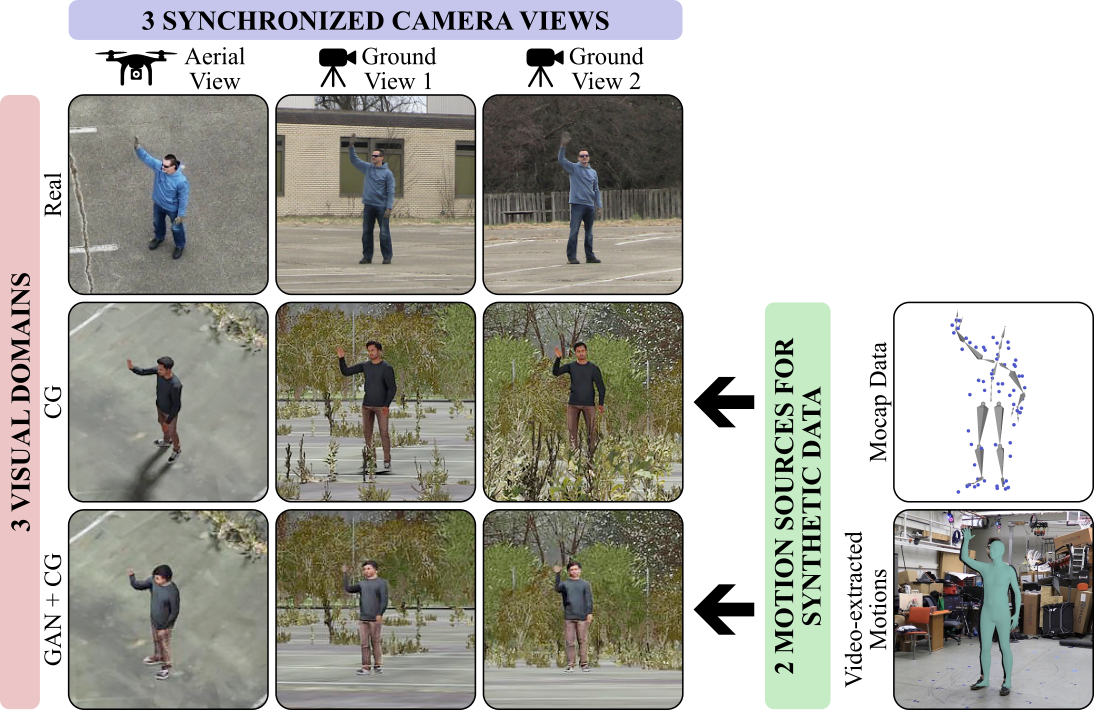

Real Data

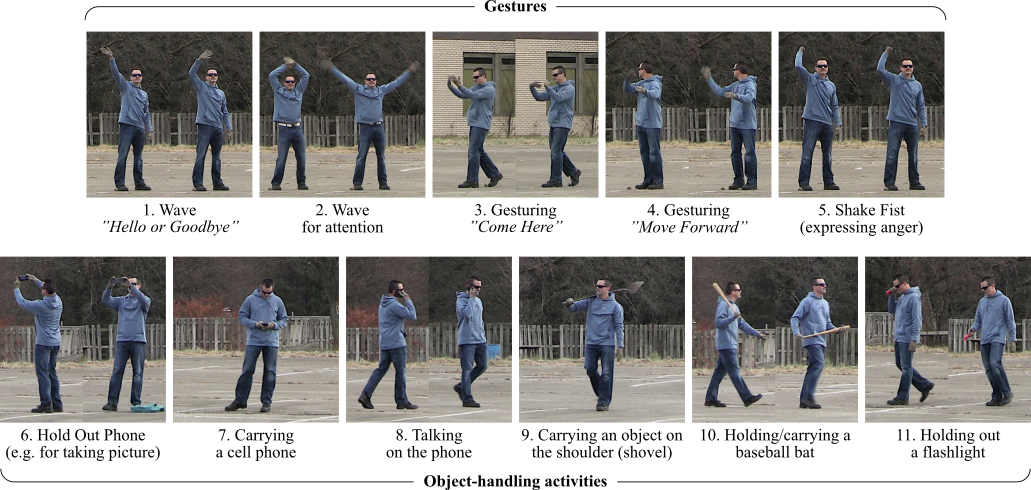

11 activities | 24 subjects | 1.5k sequences | 1.8M frames

-

Aerial View (6.52GB, MD5: cadb0d24d595cdca65ba4d16c6c14109)

Google Drive | Alternative Hosting -

Ground View (28.54GB, MD5: 445e66b91ecf68390f4505bebee382b2)

Google Drive | Alternative Hosting

Synthetic Data

| Human Characters Motion Source | |||

|---|---|---|---|

| Motion Capture Data 11 Activities | 26 subjects | VICON |

Video-based Motions 5 Activities (gestures only) | 15 subjects | VIBE |

||

| Rendering Method | Computer Graphics Blender |

[SynCG-MC] 25.4k sequences | 31.4M frames Aerial View (152.93GB) MD5: a7eec0f4576242d188e74ee10ebc877e Google Drive | Alternative Hosting Ground View (418.78GB) MD5: ca2955cb44a3ac3c074e53406745f41c Google Drive | Alternative Hosting |

[SynCG-RGB] 6.1k sequences | 5.0M frames Aerial View (24.43GB) MD5: f5657e247de7b74a83ab4df79e1b33e5 Google Drive | Alternative Hosting Ground view (67.15GB) MD5: 1cd58dc521c532eaf7a994bffdef62ff Google Drive | Alternative Hosting |

| Neural Rendering + Computer Graphics Liquid Warping GAN + Blender |

[SynLWG-MC] 25.4k sequences | 31.2M frames Aerial View (91.02GB) MD5: 3a8ae0c7a539a4e52ff3e112fe9f9af9 Google Drive | Alternative Hosting Ground view (213.67GB) MD5: 68a3cdb8019b08fff3cb7d2b9bc05576 Google Drive | Alternative Hosting |

[SynLWG-RGB] 6.1k sequences | 5.0M frames Aerial View (14.52GB) MD5: f04b912914478515bdbebec84b27b901 Google Drive | Alternative Hosting Ground view (34.86GB) MD5: c00dfd92321415b42704bb2e4b6d9cbe Google Drive | Alternative Hosting |

|